Getting started

This document describes the components of the ElcoreNN SDK.

ElcoreNN SDK is a set of tools for running neural network models on Elcore50 cores.

It consists of:

ElcoreNN (ElcoreNN DSP and ElcoreNN CPU pre-compiled libraries).

ElcoreNN CPU API headers.

Converters to convert models from ONNX and Keras to internal model format.

Examples of ElcoreNN CPU usage.

Converted models, video and images used by example applications.

Overview

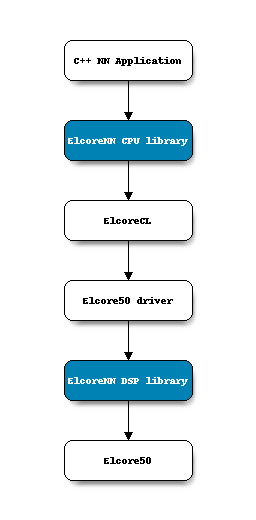

ElcoreNN is a neural network inference engine accelerated for the Elcore50 DSP. It consists of the ElcoreNN DSP library and the ElcoreNN CPU library.

The ElcoreNN DSP library is a highly optimized library for fp16 neural network inference on Elcore50.

The ElcoreNN CPU library lets you run NN applications on Linux. It is linked to the ElcoreNN DSP library via ElcoreCL (an OpenCL-like library for Elcore50).

The ElcoreNN CPU library provides a simple C++ API for loading and running neural network models. ElcoreNN uses its own model format. You can convert your model from ONNX or Keras format to the ElcoreNN model format using the converters (see Converters).

Quick start

The MCom-03 Linux SDK contains several examples of using the ElcoreNN CPU API.

The examples are installed with the MCom-03 Linux SDK, so you can run them out of the box.

The example sources are also provided to help you write your own application.

Go to mcom03-defconfig-src/buildroot/dl/elcorenn-examples/ to see the sources.

To run the examples:

Follow the MCom-03 Linux SDK instructions to prepare your device and run Linux.

From the Linux terminal on the device, go to the /usr/share/elcorenn-examples folder.

Run the prepare-examples-env.sh script to download the models and example data.

The examples run several neural network models: MobileNet, ResNet50 and a fully connected network. The models were converted from Keras to ElcoreNN format.

The following examples are available:

sample-full-connective is a simple example of a neural network that accepts an input vector [1,32], multiplies it by 1 and returns an output vector [1,32].

To run the example, use the command:

    ./sample-full-connective

Expected result:

    Input: 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32
    Output: 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32
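To make the expected result concrete, here is a minimal host-side sketch of what such a network computes. It assumes the model is a single fully connected layer with identity weights and no bias; the actual structure of the sample model is not described here. FullyConnected and IdentityWeights are hypothetical helper names, not part of the ElcoreNN API.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical reference helper (not part of ElcoreNN):
// computes y = W * x for a row-major weight matrix W of size n x n.
std::vector<float> FullyConnected(const std::vector<float>& weights,
                                  const std::vector<float>& input) {
  const std::size_t n = input.size();
  assert(weights.size() == n * n);
  std::vector<float> output(n, 0.0f);
  for (std::size_t row = 0; row < n; ++row) {
    for (std::size_t col = 0; col < n; ++col) {
      output[row] += weights[row * n + col] * input[col];
    }
  }
  return output;
}

// Builds an n x n identity matrix, i.e. the "multiply by 1" case.
std::vector<float> IdentityWeights(std::size_t n) {
  std::vector<float> weights(n * n, 0.0f);
  for (std::size_t i = 0; i < n; ++i) {
    weights[i * n + i] = 1.0f;
  }
  return weights;
}
```

Feeding the vector 1..32 through FullyConnected with IdentityWeights(32) returns the same 32 values, which matches the output printed by the sample.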

sample-resnet is an example of object classification with the ResNet50 model.

Input data: Image file (JPG, PNG).

Output data: The classification result printed to stdout: a string containing the label of the Top-1 predicted class from the ImageNet dataset and the probability of the prediction.

To run the example, use the command:

    ./sample-resnet ./data/media/bubble.jpg

You can use your own image as input: copy the image to the device and pass its path as shown above.
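The Top-1 label and probability reported by this sample can be derived from a class-score vector roughly as follows. This is a sketch under assumptions: it presumes the model output is a vector of unnormalized scores (if the model already ends in a softmax layer, only the arg-max step is needed). Top1 and Top1Result are hypothetical names, not part of the ElcoreNN API.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical result type (not part of ElcoreNN).
struct Top1Result {
  std::size_t class_index;  // index into the label list (e.g. ImageNet classes)
  float probability;        // softmax probability of that class
};

// Returns the index of the highest score and its softmax probability.
Top1Result Top1(const std::vector<float>& scores) {
  assert(!scores.empty());
  const auto max_it = std::max_element(scores.begin(), scores.end());
  // Subtract the maximum before exponentiating for numerical stability.
  double sum = 0.0;
  for (float s : scores) {
    sum += std::exp(static_cast<double>(s - *max_it));
  }
  // exp(max - max) == 1, so the probability of the top class is 1 / sum.
  return {static_cast<std::size_t>(max_it - scores.begin()),
          static_cast<float>(1.0 / sum)};
}
```

The returned class_index would then be looked up in the list of dataset labels to obtain the printed string.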

demo-classification is a demo application that classifies objects in a video file using the MobileNet model.

The application uses GStreamer and the VPU to decode the video stream and displays the prediction result on a monitor connected to the device via HDMI. It also shows the prediction time for a single Elcore50 core.

Input data: Video file (.h264 format).

Output data: MobileNet prediction result displayed on the monitor.

To run the example, use the command:

    ./demo-classification ./data/media/animals.h264
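If you want to report a prediction time in your own application, one simple approach is to time a single inference call from the CPU side. The sketch below is an assumption about how this could be done, not a description of how the demo measures it. MeasureMilliseconds is a hypothetical helper, and a host-side wall-clock measurement also includes any data transfer overhead.

```cpp
#include <chrono>
#include <utility>

// Hypothetical helper (not part of ElcoreNN): runs the callable once and
// returns the elapsed wall-clock time in milliseconds.
template <typename Callable>
double MeasureMilliseconds(Callable&& run_once) {
  const auto start = std::chrono::steady_clock::now();
  std::forward<Callable>(run_once)();
  const auto stop = std::chrono::steady_clock::now();
  return std::chrono::duration<double, std::milli>(stop - start).count();
}
```

In an application, the callable would typically be a lambda that wraps the InvokeModel call.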

sample-yolov5 is an example of object detection with the YOLOv5 model, pretrained on the COCO dataset.

Input data: Image file (JPG, PNG).

Output data: Image file with bounding boxes of the detected objects.

To run the example, use the command:

    ./sample-yolov5 -i ./data/media/bicycle.jpg

You can use your own image as input: copy the image to the device and pass its path as shown above.

How to run a model

First, prepare your model and compile your program. These steps are performed on the host machine.

Preparation (host)

Train a model using Keras or PyTorch, or use a pretrained model.

Save the model in Keras SavedModel format or ONNX format.

Convert the model to ElcoreNN model format using the converters (see Converters).

Compilation (host)

Write your C++ application using the ElcoreNN API.

main.cpp

    #include <cstdint>

    #include "elcorenn/elcorenn.h"

    int main() {
      // Init ElcoreNN resources
      InitBackend();
      // Load model from files
      auto id = LoadModel("path-to-model-json-file", "path-to-weights-bin-file");
      float* input_data;
      float* output_data;
      uint32_t batch_size;
      // Allocate input_data and output_data and fill input_data and batch_size
      // ...
      // batch_size is a batch dimension value in input_data, output_data
      // Prediction
      float* inputs[1] = {input_data};
      float* outputs[1] = {output_data};
      InvokeModel(id, inputs, outputs, batch_size);
    }
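The allocation step elided in main.cpp could be sketched as follows. This is only an illustration: the per-sample sizes are placeholders that depend on your model, ModelBuffers and AllocateBuffers are hypothetical helpers rather than ElcoreNN API, and the sketch assumes InvokeModel expects contiguous float buffers holding batch_size samples each, as main.cpp suggests.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper type (not part of ElcoreNN): owns the host buffers
// for one input tensor and one output tensor.
struct ModelBuffers {
  std::vector<float> input;
  std::vector<float> output;
};

// input_elems / output_elems: number of floats per sample, for example
// 224 * 224 * 3 and 1000 for an image classifier (assumed values).
ModelBuffers AllocateBuffers(std::uint32_t batch_size,
                             std::size_t input_elems,
                             std::size_t output_elems) {
  assert(batch_size > 0);
  ModelBuffers buffers;
  // Zero-initialized storage for batch_size samples of each tensor.
  buffers.input.assign(batch_size * input_elems, 0.0f);
  buffers.output.assign(batch_size * output_elems, 0.0f);
  return buffers;
}
```

In main.cpp, input_data and output_data could then be taken from buffers.input.data() and buffers.output.data(). The ModelBuffers object must stay alive until the InvokeModel call has returned.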

Link the ElcoreNN library.

Create a CMakeLists.txt file.

    cmake_minimum_required(VERSION 3.0)
    project(my-app)
    find_package(elcorenn REQUIRED)
    add_executable(my-app main.cpp)
    target_link_libraries(my-app elcorenn)

Cross-compile the program using aarch64-buildroot-linux-gnu_sdk-buildroot, which is provided with the MCom-03 Linux SDK.

Unpack the aarch64-buildroot-linux-gnu_sdk-buildroot.tar.gz archive and go into the unpacked folder.

Run the following commands to relocate the SDK path and set up the environment:

    ./relocate-sdk.sh
    source environment-setup

Go back to the folder with your code and run:

    mkdir build && cd build
    cmake .. -DCMAKE_BUILD_TYPE=Release -G "Unix Makefiles"
    make

Running model (mcom03)

Open a terminal on the host machine connected to the device:

    # connect the host machine to the board using UART
    # run this command on the host machine
    minicom -D /dev/ttyUSBX  # where X is the number of the USB device

Run Linux on the device.

Copy the executable file to the device:

    scp ./my-app root@[device-ip-addr]:/root  # use ifconfig from the minicom terminal to view ip-addr

Execute the application:

    # from the device terminal run
    cd /root
    ./my-app